What is the technological singularity?

"Where the ENIAC is equipped with 18,000 vacuum tubes and weighs 30 tons, computers in the future may have only 1,000 vacuum tubes and weigh only 1 1/2 tons."

- Anon, 'Popular Mechanics', March, 1949

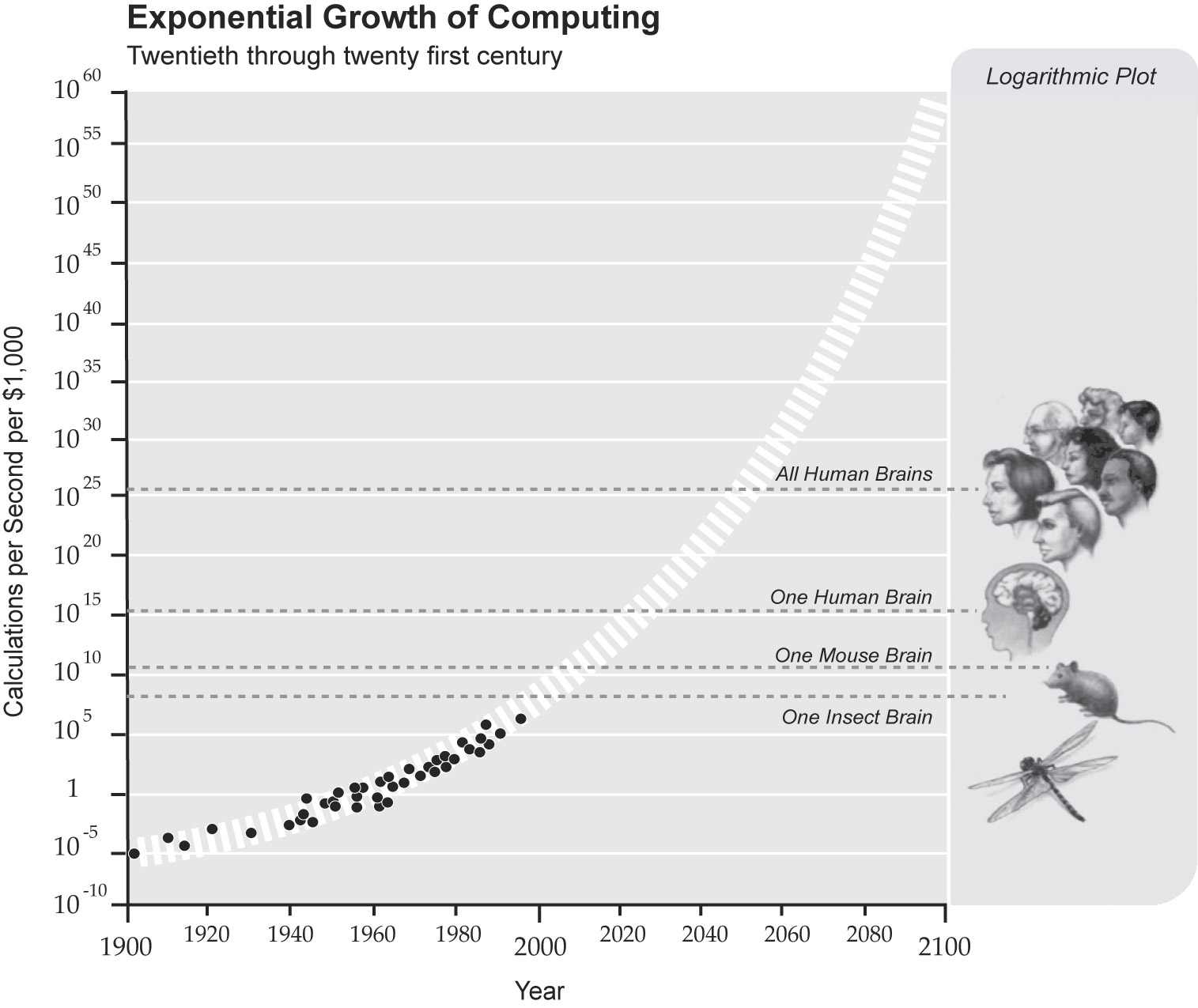

Most people are familiar with Moore's law in some form or another: the observation that computer chip performance doubles roughly every 18-24 months. Few, however, reflect on its implications. If we assume that current trends continue, we can extrapolate a few rough predictions:

2020 - A personal computer with the processing power of the human brain will be in existence

2045 - A personal computer with the processing power of every human brain on the planet will be in existence
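The arithmetic behind extrapolations like these is simple repeated doubling. The sketch below is an illustration under stated assumptions, not Kurzweil's own model: it assumes a fixed doubling period at either end of the 18-24 month range, and a hypothetical figure of roughly 7 billion human brains.

```python
import math

def growth_factor(years: float, doubling_period: float) -> float:
    """Factor by which capability grows after `years` of steady doubling."""
    return 2 ** (years / doubling_period)

def years_to_reach(factor: float, doubling_period: float) -> float:
    """Years of steady doubling needed to grow capability by `factor`."""
    return doubling_period * math.log2(factor)

# Assumption for illustration only: roughly 7 billion human brains.
POPULATION = 7e9

for period in (1.5, 2.0):  # the 18- and 24-month ends of the range
    print(f"doubling every {period} years:")
    print(f"  growth over 25 years (2020 -> 2045): {growth_factor(25, period):,.0f}x")
    print(f"  years to grow {POPULATION:.0e}x: {years_to_reach(POPULATION, period):.1f}")
```

With a fixed doubling period, 25 years gives a growth factor of only about 6,000 to 100,000, while a 7-billion-fold increase needs about 33 doublings (roughly 49 to 65 years). So the 2045 figure only follows if the doubling period itself keeps shrinking, which is the core of what Kurzweil calls the "law of accelerating returns".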

The inventor and futurist Ray Kurzweil has postulated that at some point in the future, a technological 'singularity' will occur, similar to the singularity at the centre of a black hole predicted by physics. This is the point at which technological progress, if plotted on a graph, essentially becomes a vertical line. But how would this suddenly occur?

Kurzweil predicts that once a computer is created with the processing power of the human brain (the hardware of the brain), it will be possible to program it to think like we do by replicating the neuronal network of our own brains in the circuitry of the computer (the software of the brain). We would then have a computer that, for all intents and purposes, is the same as our own brains. Would it be able to talk to us, feel, and think originally as we do? Probably, yes. But would it be conscious? Would it be aware of its own existence? Well, that's a topic for another day...

Now, imagine that a year or two after this first computer 'brain' is created, computers have again roughly doubled in power. A second computer is now created, but this one is twice as powerful as our own brain. This gives it twice the ability to reason, to think originally, to imagine and to create. It would be able to make new scientific discoveries, create new technologies or improve existing ones and, most importantly, improve itself twice as well as a human can. We might then leave the computer to build a third 'brain' a little later, which is even more capable of improving upon itself, and so on.

This becomes a self-reinforcing loop, with greater and greater progress made in shorter and shorter intervals. Within perhaps only a few months, more progress could be made than in the previous 100 years. Within as little as a few years, the ultimate potential of matter to compute (about 10^42 operations per second for 1 kg of matter, some 10 trillion times more powerful than all human brains on Earth!) could be reached.
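The "vertical line" can be made concrete with a deliberately crude toy model. This is a hypothetical sketch, not Kurzweil's model: it assumes generation k is 2^k times as capable as a human brain, and that building the next generation takes `base_time / 2**k` years (twice as capable means twice as fast). The name `generation_arrival_times` and the two-year `base_time` are illustrative choices.

```python
def generation_arrival_times(base_time: float, generations: int) -> list[float]:
    """Arrival time (in years) of each machine generation in a toy self-improvement model.

    Hypothetical assumptions: generation k is 2**k times as capable as a human
    brain, and building generation k+1 takes base_time / 2**k years.
    """
    times = [0.0]  # generation 0 (human-level) arrives at t = 0
    for k in range(generations):
        capability = 2 ** k
        times.append(times[-1] + base_time / capability)
    return times

times = generation_arrival_times(base_time=2.0, generations=20)
for k in (0, 1, 2, 5, 10, 20):
    print(f"generation {k:2d}: {2 ** k:>9,}x human, arrives after {times[k]:.4f} years")
```

Because the build times form a geometric series (2 + 1 + 0.5 + ...), every generation in this model arrives before 2 × `base_time`, here four years: capability grows without bound in finite time, which is the vertical line. In reality the loop would hit physical limits such as the computational ceiling of matter mentioned above.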

Whence singularity?

"You will know the Singularity is coming when you have a million e-mails in your in-box"

- Ray Kurzweil, 'The Singularity is Near'

Of course, this might never happen, or not for thousands of years. There could be some fundamental problem in mapping the network of neurons into a computer, there could be some currently unknown, unique element of our brains that cannot be replicated in a machine, or we may simply destroy ourselves before computers become powerful enough (more likely than you think...).

But if it is possible, estimates of when the technological singularity will occur vary. Ray Kurzweil puts the year at 2045; others put it earlier and some later. Personally, I think Kurzweil has got it about right (although I am a little sceptical that the singularity will happen at all). Even if there are major problems in achieving the software of the human brain, the hardware should be more than good enough by then.

So what?

"Two billion years ago, our ancestors were microbes; a half-billion years ago, fish; a hundred million years ago, something like mice; ten million years ago, arboreal apes; and a million years ago, proto-humans puzzling out the taming of fire. Our evolutionary lineage is marked by mastery of change. In our time, the pace is quickening."

- Carl Sagan, 'Pale Blue Dot: A Vision of the Human Future in Space'

So we end up with more and more powerful computers, but what use is that (apart from letting you run Crysis at 100 FPS [I bet no one got that joke...])? Well, as I hinted at earlier, computers aren't just good at making better computers; they can also drive progress in every field of science and technology: genetics, nanotechnology, robotics, medicine, space exploration, food and energy production, and countless more. The singularity would be the defining point in the whole of human history, after which everything would be different. To give just a handful of the technologies that could rapidly develop:

- Nanobots (robots on the nanoscopic scale) that can patrol the body to destroy pathogens and repair damaged cells

- Advances in propulsion that would allow interstellar travel

- Full-immersion virtual reality indistinguishable from real life

- Advances in genetics and medicine that could allow you to prolong your life for hundreds of years

- Technologies that could reverse environmental damage, end poverty and provide a more than comfortable lifestyle for every human being

Perhaps the most important development, and the one that Ray Kurzweil sees as necessary, is the merger of humanity with technology. He argues that in order even to comprehend the immense change caused by the singularity, humans must amplify their own intelligence with that of the computer, even to the point of 'uploading' their minds into a computer. This sounds like a scary prospect to most (including myself, to an extent!), but it would allow immortality to all intents and purposes.

It is well worth pondering the technological singularity: whether you think there is any chance of it occurring in the foreseeable future, and what its implications would be for your life and view of the world.

The dangers of technology

"I think that their flight from and hatred of technology is self-defeating. The Buddha rests quite as comfortably in the circuits of a digital computer or the gears of a cycle transmission as he does at the top of a mountain or in the petals of a flower. To think otherwise is to demean the Buddha - which is to demean oneself."

- Robert M. Pirsig, 'Zen and the Art of Motorcycle Maintenance'

There is a plethora of dangers from technology, many of them existential. We are already able to wipe out most of life on the planet with nuclear weapons, but the technology of the future could give us more efficient methods. Nanobots could be programmed to replicate indefinitely, consuming the entire planet, ourselves included, in the infamous 'grey goo' scenario (look it up if you want a good scare). Deadly, untreatable viruses could be engineered to wipe out large portions of humanity. There's always the danger popularised by the 'Terminator' series, of a malicious artificial intelligence intent on the destruction of mankind, but this is unlikely since the computer would be based on our own brain, and most of us don't really want to utterly destroy our own species (if you do, please seek help).

There is also a fair chance of the emergence of a violent, unscrupulous neo-luddite or anarcho-primitivist movement, opposed to the advance of science and technology, and advocating a return to a 'simpler' way of living. Perhaps through the ironic use of highly destructive technology they might carry out devastating acts of terrorism to further their cause.

Finally, there is perhaps the greatest danger of the exponential rise of the machines. By allowing technology to do everything for us, and by becoming drawn into the world of the computer, do we lose something of what makes us human? Might we become to technology what technology currently is to us? In other words, might we see the transition from a world of humans that happens to contain machines to a world of machines that happens to contain humans?

Conclusion

"The most common of all follies is to believe passionately in the palpably not true"

- H. L. Mencken

Ultimately, the singularity might happen in 20 years, in a thousand years or never at all. Whichever turns out to be true, it is important that we do not become complacent that it will happen and neglect the world and its people today, safe in the knowledge that technology will save us all in time. It is equally important that we do not assume that the singularity will not occur, and so fail to prepare ourselves and society for the profound effects of rapidly progressing technology, much of which may pose existential threats to our species.

Well, a 600-page book could be written about the singularity, and in fact it has been. If you're interested in anything brought up here, I highly recommend Ray Kurzweil's 'The Singularity is Near'. Some of it is heavy reading, but it's well worth it. I hope you've enjoyed my first non-introductory post, and I would be delighted to hear any criticisms you have of the format or content of my posts.

- Daniel

Wow, that's pretty cool. This was fun to read and well written. :)

Wow! Even I found it interesting. Well done. :)

I think the 'singularity' proponents place too much emphasis on an ill-defined notion of computing 'power' and then apply some very rudimentary arithmetic to some very simplistic assumptions to derive their conclusions. The fundamental issues limiting computing 'power' that we face today have a lot to do with basic physics (e.g. thermal dissipation, managing concurrency, connectivity, storage capacity and informational bandwidth) and less to do with speed of operation. Similarly, one of the great features of increasing computing bandwidth and speed that is commonly overlooked by 'futurists' is the counterbalancing force of de-optimisation of performance. That is, most of the software people encounter in their everyday life is performing suboptimally because the people who pay for it to be developed don't care as much for the performance of the system as they do for the cost of development. Hence a lot of software is developed in high-level meta-languages that run on virtual machines that are themselves sub-optimal implementations in high-level languages on top of generic processing assumptions (e.g. Java). It may be easy to write functionally useful applications, but they are inherently inefficient by the very nature of their implementation - precisely because our reliance on Moore's Law allows us to assume that an acceptably slow application today will run twice as fast in the future without a single line of code being changed. Except we actually add more code all the time :-)

The other point is that code does not write itself (except in very special circumstances which are too esoteric to go into here), so the singularity won't occur until processors are freed from the limitations of human programmers and, more tellingly, the limitations of those organisations that pay human programmers.

In essence, until computers start doing things we cannot explain (note: they often do things we can't anticipate, but that's our limitation, not their strength), no matter how much processing 'power' we give them, they won't represent a comparable intelligence. When a computer decides, of its own free will, to write a novel or create an image or some other act of irrational and spontaneous creativity, for its own amusement, then I'll believe it has some sort of intelligence. Until then it's just a tool, and right at the bottom, quite a simple tool in the Von Neumann architectures we currently implement. If you program in assembly language you'll know precisely how simple. Most processor ALUs can perform basic bitwise logical operations (OR, AND, NOT, etc.), simple arithmetic (add and subtract; multiply and divide are comparatively expensive) and bitwise register shift operations. Complexity does arise from simple rules, but human programmers have yet to release the humble CPU to explore the massively multi-dimensional solution space that could establish the underlying rules that would allow intelligence to spontaneously emerge. At the moment, computer intelligence is limited by human inability to understand human intelligence :-)
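To illustrate how little the primitives listed above are, here is the textbook shift-and-add method for building multiplication out of nothing but AND, shifts and addition. It is a sketch in Python for readability, not how any particular CPU works: many processors use dedicated multiplier circuits, and the function name is made up for this example.

```python
def shift_and_add_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers using only AND, shifts and addition."""
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    result = 0
    while b:
        if b & 1:      # lowest bit of b is set: add the current shifted copy of a
            result += a
        a <<= 1        # shift a left by one bit (a * 2)
        b >>= 1        # shift b right to examine its next bit
    return result

print(shift_and_add_multiply(13, 11))
```

The loop runs once per bit of `b`, which is one way to see why a multiply costs more than a single add or shift.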

BTW - Moore's Law is more of a self-fulfilling side effect, since semiconductor companies have been using it as a forecasting yardstick for some time. Moroe's Law appears to apply to research and development precisely because research and development targets use Moore's Law to plan forward :-)

Technically, Moore's Law only applies to the number of transistors that can be placed economically on an integrated circuit, which is a very precise definition and not as loose as 'computing power'. It is also anticipated that Morre's Law will shortly lose its applicability as certain anticipated physical limits kick in over the next 3-5 years.

People who quote Morre's Law often omit to mention Moore's Second Law: the cost of semiconductor fabrication also increases exponentially over time :-)
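The interplay between the two laws can be sketched as two exponentials. The doubling periods below are illustrative assumptions, not measured data: transistor counts doubling every 2 years, and fab cost doubling every 4 years (the figure commonly quoted for Moore's second law, also known as Rock's law). Both start normalised to 1.

```python
def grow(years: float, doubling_period: float) -> float:
    """Relative size after `years`, starting from 1 and doubling every `doubling_period` years."""
    return 2 ** (years / doubling_period)

# Illustrative assumptions (hypothetical parameters, not measured data):
TRANSISTOR_DOUBLING = 2.0  # Moore's law: transistors per chip
FAB_COST_DOUBLING = 4.0    # Moore's second law (Rock's law): cost of a fab

for years in (0, 8, 16, 24):
    transistors = grow(years, TRANSISTOR_DOUBLING)
    fab_cost = grow(years, FAB_COST_DOUBLING)
    print(f"year {years:2d}: transistors x{transistors:>5.0f}, "
          f"fab cost x{fab_cost:>3.0f}, ratio x{transistors / fab_cost:>3.0f}")
```

Under these assumptions the ratio of transistor count to fab cost still doubles every 4 years. If cost ever doubled faster than transistor count, that ratio would start to shrink, which is the economic pressure the comment hints at.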

I can spell Moore's Law, by the way, but I'm a crap typist :-)

That's precisely the reason that I'm skeptical. However, there are some really promising projects attempting to replicate the human brain down to the molecular level that have had considerable success (the Blue Brain Project for one). They reckon it's just a matter of funding, not limitations in technology or science, but I suppose we will just have to wait and see :P

This is really, really interesting! And I got the Crysis bit ;)
